Benchmarks

There is no shame in being wrong, only in failing to correct our mistakes.

"High-Performance" is meaningless without Comparative Benchmarks

When was the last time you saw a Web server author publish open-source comparative tests against 27 competitors?

- Apache (IBM)

- Apache Traffic Server (Yahoo!)

- IIS (Microsoft)

- GlassFish (Oracle)

- Tuxedo (Oracle)

- TntNet (Deutsche Boerse)

- Rock (Accoria)

- Tomcat (Apache Foundation)

- JBoss (Red Hat)

- Jetty

- Caucho Resin

- PlayFramework

- Lighttpd

- Nginx

- Varnish (the Web 'accelerator')

- OpenTraker

- Mongoose

- Cherokee

- Monkey

- Libevent

- Libev

- ULib

- Poco

- ACE

- Boost

- Snorkel

- AppWeb

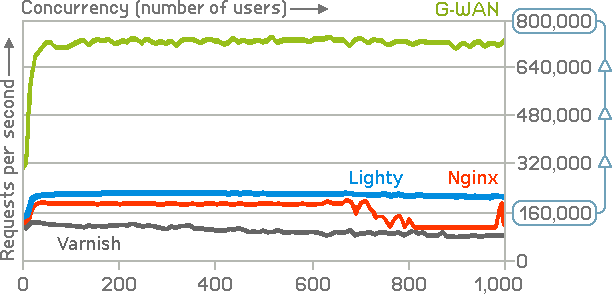

Let's have a closer look at Nginx and Lighttpd, the rising stars. (Varnish, "the Web accelerator", is included in this SMP weighttp test because an AB test made by Nicolas Bonvin was criticized for not using Varnish's "heavily threaded" architecture.)

This test (tool, details and charts) was done on localhost to remove the network from the equation (a 1 GbE LAN is the bottleneck).

This is a 100-byte static file served on a 6-Core CPU by 6 server workers (processes for Nginx/Lighty, threads for G-WAN) and requested by 6 weighttp threads.

G-WAN v2.10.8 served 749,574 requests per second (Nginx: 207,558; Lighty: 215,614; Varnish: 126,047).
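To see why a 1 GbE link would cap such a test, the data rate implied by the G-WAN figure above can be worked out. This is a minimal sketch: the 250-byte response header size is an assumed, illustrative value (the page does not give it), and TCP/IP framing overhead is ignored.

```python
# Bandwidth implied by the G-WAN result above, compared to a 1 GbE link.
RPS = 749_574                       # requests per second (from the text above)
BODY_BYTES = 100                    # size of the static file (from the text above)
ASSUMED_HEADER_BYTES = 250          # hypothetical HTTP response header size
LINK_BITS_PER_SEC = 1_000_000_000   # nominal 1 GbE capacity


def required_bits_per_sec(rps, bytes_per_response):
    """Bits per second needed to carry `rps` responses of the given size."""
    return rps * bytes_per_response * 8


body_only = required_bits_per_sec(RPS, BODY_BYTES)
with_headers = required_bits_per_sec(RPS, BODY_BYTES + ASSUMED_HEADER_BYTES)

print(f"body only:    {body_only / 1e9:.2f} Gbit/s")
print(f"with headers: {with_headers / 1e9:.2f} Gbit/s")
print("exceeds 1 GbE:", with_headers > LINK_BITS_PER_SEC)
```

Under these assumptions the file bodies alone would already use more than half of the link, and the total with headers would exceed it.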

G-WAN served 3 to 7x more requests in 3 to 6x less time than the other servers:

| Server | Avg RPS | RAM | CPU (jiffies) | Time for test |
|---|---|---|---|---|
| Nginx | 167,977 | 11.93 MB | 2,910,713 | 01:53:43 = 6,823 seconds |
| Lighty | 218,974 | 20.12 MB | 2,772,122 | 01:09:08 = 4,748 seconds |
| Varnish | 103,996 | 223.86 MB | 4,242,802 | 03:00:17 = 10,817 seconds |
| G-WAN | 729,307 | 5.03 MB | 911,338 | 00:28:40 = 1,720 seconds |

And despite being the fastest, G-WAN used 3 to 5x less CPU as well as 2 to 45x less RAM (note for Varnish: this is the allocated memory measured here, not the reserved virtual memory address space, which does not deprive the system of any hardware resource).
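The comparison can be rechecked directly from the table. The sketch below copies the four rows as they appear above and divides each server's figures by G-WAN's; the function and variable names are ours, for illustration only.

```python
# Figures copied from the table above.
# Each entry: (average RPS, RAM in MB, CPU jiffies, test duration in seconds)
RESULTS = {
    "Nginx":   (167_977,  11.93, 2_910_713,  6_823),
    "Lighty":  (218_974,  20.12, 2_772_122,  4_748),
    "Varnish": (103_996, 223.86, 4_242_802, 10_817),
    "G-WAN":   (729_307,   5.03,   911_338,  1_720),
}


def advantage_of(winner, results=RESULTS):
    """For every other server, return how many times more requests the winner
    served and how many times less RAM, CPU and time it used."""
    w_rps, w_ram, w_cpu, w_secs = results[winner]
    table = {}
    for name, (rps, ram, cpu, secs) in results.items():
        if name == winner:
            continue
        table[name] = {
            "rps": w_rps / rps,
            "ram": ram / w_ram,
            "cpu": cpu / w_cpu,
            "time": secs / w_secs,
        }
    return table


for name, r in advantage_of("G-WAN").items():
    print(f"{name:8s} requests x{r['rps']:.1f}  RAM x{r['ram']:.1f}  "
          f"CPU x{r['cpu']:.1f}  time x{r['time']:.1f}")
```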

When merely used as a static-content Web server, G-WAN (an application server) is 9 to 42x faster and uses much less CPU/RAM resources than all other servers ("Web server accelerators" like Varnish or mere Web servers like Nginx).

Guess how much wider the gap will be with 64-Core CPUs.

Dynamic Contents: Scripts vs. Compiled code

But G-WAN was written to be an application server, so it also supports 7 of the most popular languages as scripts: Java, C#, C/C++, D and Objective-C/C++.

The success of scripts comes from the instant gratification that interpreters provide: at the press of a key your code executes instantly. Compare this with the compilation and linkage cycles of Apache, Lighttpd or Nginx modules (which require server stops & restarts).

But since low performance comes at a cost, interpreters are giving way to compilers (for example Facebook's HipHop PHP-to-C++ translator).

G-WAN C scripts offer the best of both worlds: the convenience of scripts and the speed of compiled code.

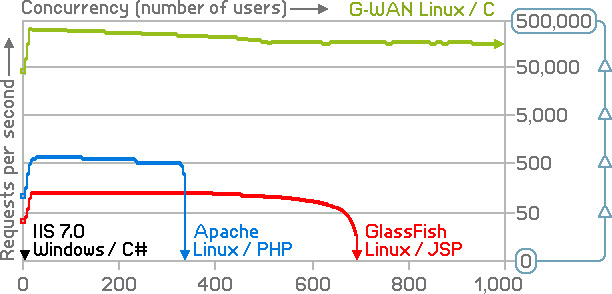

Comparing G-WAN C scripts / Apache + PHP / GlassFish + Java / IIS + C#

To evaluate the performance gap of poorly executed convenience, we invited the major scripted language vendors to review our version of the loan.c script ported to other languages (see below) – and then we used an ApacheBench wrapper to test them all:

Note the logarithmic scale of the Requests-per-Second axis. This is a 100-year loan test with G-WAN v2.10 (older tests). Latencies:

- G-WAN + C script: 0.4 ms (1x)

- Apache + PHP: 12.6 ms (32x)

- GlassFish + Java: 42.3 ms (106x)

- IIS + C#: 171.8 ms (430x)
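The multipliers in parentheses are each latency divided by the G-WAN latency, rounded to the nearest whole number. A short sketch that reproduces them; it uses `Decimal` to avoid binary floating-point surprises on values such as 12.6 / 0.4, and the names are ours.

```python
from decimal import Decimal, ROUND_HALF_UP

# Latencies in milliseconds, copied from the list above.
LATENCY_MS = {
    "G-WAN + C script": Decimal("0.4"),
    "Apache + PHP": Decimal("12.6"),
    "GlassFish + Java": Decimal("42.3"),
    "IIS + C#": Decimal("171.8"),
}


def relative_latency(latencies, baseline):
    """Return each latency as a whole-number multiple of the baseline latency."""
    base = latencies[baseline]
    return {
        name: int((ms / base).quantize(Decimal("1"), rounding=ROUND_HALF_UP))
        for name, ms in latencies.items()
    }


multiples = relative_latency(LATENCY_MS, "G-WAN + C script")
for name, factor in multiples.items():
    print(f"{name}: {factor}x")
```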

Seeing those results, some said that "G-WAN is faster only because it runs ANSI C scripts". This is obviously false, since G-WAN (serving static or dynamic contents) is faster than Nginx (serving static contents). G-WAN is just a better server.

When used as an application server, G-WAN is 32 to 430x faster and uses much less CPU/RAM resources than all other servers (on top of not collapsing as concurrency grows).

Anyway, Java is not ANSI C, so to avoid being accused of "comparing apples to oranges" let's see how much faster G-WAN + Java runs compared to Apache Tomcat – a server dedicated to Java.

Comparing G-WAN + Java to Apache Tomcat (a Java App. server)

Using the simplest possible Java script hello.java (instead of the loan-100 used above) to let Tomcat survive the experiment, G-WAN (loaded with a JVM weighing 20 MB RAM at startup) serves 10x more requests in 3.7x less time than Apache Tomcat while using 4.3x less RAM and 2.8x less CPU resources:

| Server | Avg RPS | Elapsed time for the test | RAM | User (CPU jiffies) | Kernel (CPU jiffies) | Total (CPU jiffies) |
|---|---|---|---|---|---|---|
| G-WAN | 759,726 | 00:27:28 = 1,648 seconds | 46.90 MB | 186,709 | 592,207 | 778,916 |
| Tomcat | 73,903 | 01:41:42 = 6,102 seconds | 203.80 MB | 1,582,074 | 630,299 | 2,212,373 |

This test "compares apples to apples": G-WAN + hello.java and Tomcat + hello.java. To do the same job, G-WAN + Java is 37x faster than Tomcat. And this is for a skinny "hello world". When Tomcat stops responding under real-life jobs like the "loan" test above, the cost grows without bound: 750,000 RPS / 0 RPS = ∞
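The ratios quoted for this test can be recomputed from the two table rows. The page does not say how the "37x" headline figure is derived; the sketch below assumes it is the product of the two rounded ratios (10x more requests times 3.7x less time), which is our reading rather than a documented method.

```python
# Figures copied from the G-WAN + hello.java vs. Tomcat + hello.java table above.
GWAN = {"rps": 759_726, "seconds": 1_648, "ram_mb": 46.90, "jiffies": 778_916}
TOMCAT = {"rps": 73_903, "seconds": 6_102, "ram_mb": 203.80, "jiffies": 2_212_373}

more_requests = GWAN["rps"] / TOMCAT["rps"]
less_time = TOMCAT["seconds"] / GWAN["seconds"]
less_ram = TOMCAT["ram_mb"] / GWAN["ram_mb"]
less_cpu = TOMCAT["jiffies"] / GWAN["jiffies"]

# Assumption: the headline figure multiplies the two rounded ratios.
headline = round(more_requests) * round(less_time, 1)

print(f"requests x{more_requests:.1f}, time x{less_time:.1f}, "
      f"RAM x{less_ram:.1f}, CPU x{less_cpu:.1f}")
print(f"assumed headline: {headline:.0f}x")
```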

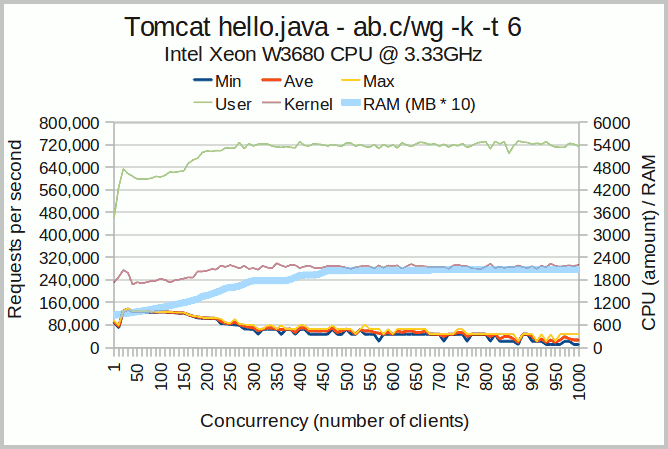

Tomcat + hello.java

Tomcat has a slow start despite a couple of requests done before the test to warm up the JVM. The user-mode and kernel CPU usage is remarkably high.

The memory usage is also growing wildly, closely correlated to the CPU usage (an obvious hint at a key point to enhance in Tomcat).

What is immediately visible is the dying scalability under a relatively modest concurrency load. The JVM is not the problem here, as G-WAN was not slowed down.

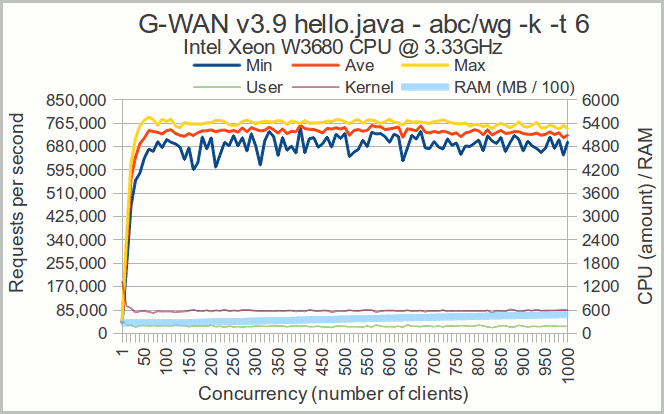

G-WAN + hello.java

G-WAN's performance and scalability are following a Requests per Second (RPS) slope correlated with a very low and almost constant CPU usage.

The memory usage is also very low and stable, contributing to a low user-mode overhead that lets the Linux kernel scale vertically as concurrency is growing.

The weight of the JVM is visible (a hefty 46.90 MB at the end of the test) but Tomcat is using 4 times more RAM.

Conclusion: If you have to use Java then G-WAN's vertical scalability can help you to save a lot on horizontal scalability, which requires more hardware (servers, cooling systems, network equipment like switches and load-balancers), floor space, staff and electricity – a resource whose cost is expected to double in the next decade.

Calling G-WAN C scripts to the rescue

We have seen above that G-WAN makes Java fly much higher than Apache Tomcat. But if some critical code must perform and scale better, then G-WAN C scripts will give you the boost you need. G-WAN + hello.c is now serving more requests in less time while consuming 5.5x less RAM and slightly less CPU (about 4% fewer jiffies) than G-WAN + hello.java:

| Test | Avg RPS | Total elapsed time | RAM | User (jiffies) | Kernel (jiffies) | Total (jiffies) |
|---|---|---|---|---|---|---|
| G-WAN hello.c | 801,585 | 00:25:51 = 1,551 seconds | 8.60 MB | 182,280 | 563,701 | 745,981 |
| G-WAN hello.java | 759,726 | 00:27:28 = 1,648 seconds | 46.90 MB | 186,709 | 592,207 | 778,916 |
| Tomcat hello.java | 73,903 | 01:41:42 = 6,102 seconds | 203.80 MB | 1,582,074 | 630,299 | 2,212,373 |
| Nginx 100.html | 167,977 | 01:53:43 = 6,823 seconds | 11.93 MB | 1,000,270 | 1,910,443 | 2,910,713 |
| G-WAN 100.html | 826,821 | 00:25:16 = 1,516 seconds | 9.21 MB | 155,884 | 563,147 | 719,031 |

You read that right. G-WAN + hello.c processes 11x more requests in 4x less time while consuming 24x less RAM and 3x less CPU resources than Apache Tomcat.

Right, unlike the test above we are now "comparing apples to oranges" (C vs Java) but G-WAN C scripts can be useful as they do the same job 44x faster than Apache Tomcat... for a mere "hello world" test.

G-WAN C scripts

With a lower C runtime overhead than the JVM tested above, G-WAN's latency is visibly lower and less variable while performance and scalability are higher.

Memory usage is now lower and more constant, like the tiny user-mode usage which (a) leaves the resources available for the kernel and (b) preserves CPU caches.

The small-footprint C runtime works wonders for low-latency tasks which require the most efficient solution.

The "hello world" program does very little, but the little it does illustrates how much more efficient ANSI C is compared to Java. And the gap is much wider with more substantial tests than a simple "hello world". That difference is paid by end-users.

Whether you are running one single Web server or a Big Data server farm, G-WAN is your best option to make significant savings and to offer the most extraordinary performance without eye-watering investments – and their recurring costs.

G-WAN faster (as an App. server) than Nginx (serving static files)

In the light of the tests above, it is now obvious that not all script engines are equal – even without concurrency. But the same goes for server technologies:


G-WAN serves 350,034 100-year loans per second.

G-WAN serves this 131.4 KB dynamic page faster than the leading Web servers can serve a mere 100-byte static file: Nginx 207,558 and Lighty 215,614, see the first chart at the top of this page.

G-WAN Web apps perform better than tiny static files served by Nginx!

When properly used, multi-Cores are great and will really let you save money!

To sum it up: using the least efficient technologies comes at a hefty (recurring) cost.

Comparing G-WAN to Nginx, Lighttpd, Varnish and Apache Traffic Server (ATS)

Using a low-end Intel Core i3 laptop and a 100-byte static file, an independent expert [1] of the EPFL's Distributed Information Systems Laboratory has evaluated the performance of the best Web servers (and "Web server accelerators" like Varnish and ATS) by using this open-source ApacheBench (AB) wrapper:

The authors of Nginx and Varnish participated in this study (click the chart to read it).

They helped to tune their servers: Igor went as far as to ask for new benchmarks with the latest version of Nginx and then, when Nginx v1.0 made no difference, to ask for a rebuilt, stripped-down Nginx in an attempt to catch up with G-WAN's performance.

Varnish provided several versions of its configuration file and, like Igor for Nginx, requested several benchmarks to be done.

Their efforts did not make any difference: G-WAN (without tuning and with more features than all others) is just much faster.

This test is less impressive than the SMP tests above because ApacheBench (AB) is a single-threaded client (which cannot saturate SMP servers like G-WAN).

Note that G-WAN v2.1, the version tested by Nicolas Bonvin, was 4x faster (serving more requests in less time) and used half the CPU resources of Nginx – but it used more memory. This pushed us to at least equal Nginx's feat: G-WAN v2.8+ uses less memory than Nginx (while offering many more features like a 'wait-free' KV store – the first of its kind – as well as Java, C#, C/C++, D and Objective-C scripts to generate dynamic contents).

Apache / Cherokee / G-WAN / Lighttpd / Litespeed / Nginx / Varnish on 8-Core

More recently, using a high-end dual Intel 4-Core server, an independent sysadmin compared the performance of the best Web servers (and caches like Varnish) CPU Core by CPU Core:

This test used a virtualization layer and the kernel options were probably not optimized to tune the TCP/IP stack.

But, hey, one thing is crystal clear: only one single Web server scales on multicore – and that's G-WAN.

In this test G-WAN v3.3 was tested (G-WAN v3.8 is 100k req/sec faster, scales better and v3.10 fixed a NUMA affinity bug for even better results).

This means that people will not have any reason to buy 32 or 64-Core servers – unless they are using G-WAN.

Click the chart above to read the blog post which contains many charts and the configuration of all servers.

Why are localhost tests relevant?

We have all read that "benchmarks on localhost do not reflect reality".

Sure, there is no substitute for a 100GbE network of tens of thousands of inter-connected machines driven by human users available to test your Web application each time you need it. But not everybody can afford this kind of test.

The most relevant substitute is localhost: without bandwidth limits you will test the server rather than the network (tuned by "PR" benchmarks with OS kernel patches, multi-homed servers using arrays of 10 Gbps NICs and tuned drivers, high-end switches, etc.).

Everybody has access to the same (free and standard) localhost.

A test that everybody can duplicate certainly has some value – especially if your goal is merely to compare how different HTTP servers behave under heavy loads (CPU, memory usage and performance: requests per second).
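As an illustration of how little is needed to reproduce the method (not the numbers), here is a minimal localhost load test using only the Python standard library. It is a sketch under stated assumptions: the tiny built-in server stands in for the server under test, the client count and request count are arbitrary, and an interpreted client like this one is far slower than a dedicated tool such as weighttp, so its results say nothing about the servers discussed on this page.

```python
import http.client
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

PAYLOAD = b"x" * 100  # 100-byte body, like the static file used in the tests


class StaticHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # allow keep-alive connections
    wbufsize = 1 << 16             # buffer headers and body into one send

    def do_GET(self):
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(PAYLOAD)))
        self.end_headers()
        self.wfile.write(PAYLOAD)

    def log_message(self, fmt, *args):
        pass  # silence per-request logging


def worker(port, n_requests, results, index):
    """Send n_requests GETs over one keep-alive connection; count good replies."""
    conn = http.client.HTTPConnection("127.0.0.1", port)
    ok = 0
    for _ in range(n_requests):
        conn.request("GET", "/100.html")
        resp = conn.getresponse()
        body = resp.read()
        if resp.status == 200 and len(body) == len(PAYLOAD):
            ok += 1
    conn.close()
    results[index] = ok


def run_benchmark(n_clients=6, n_requests=200):
    """Return (successful requests, elapsed seconds, requests per second)."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), StaticHandler)  # port 0: any free port
    port = server.server_address[1]
    threading.Thread(target=server.serve_forever, daemon=True).start()
    results = [0] * n_clients
    clients = [
        threading.Thread(target=worker, args=(port, n_requests, results, i))
        for i in range(n_clients)
    ]
    start = time.perf_counter()
    for t in clients:
        t.start()
    for t in clients:
        t.join()
    elapsed = time.perf_counter() - start
    server.shutdown()
    server.server_close()
    total = sum(results)
    return total, elapsed, total / elapsed


if __name__ == "__main__":
    total, elapsed, rps = run_benchmark()
    print(f"{total} requests in {elapsed:.2f} s = {rps:.0f} RPS")
```

To compare real servers, the same client loop would be pointed at each server's port in turn, with the server started separately.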

With 400 GbE networks in the works, the question of how fast servers can run becomes crucial.

Why does CPU load matter?

When we have 1,000-Core CPUs with address bus saturation resolved, the Linux kernel will make a machine hundreds of times faster. And G-WAN will be much faster without requiring any modification. This is because G-WAN/Linux scales on multi-Core systems while using little CPU resources (see the first chart of this page).

For many parallelized Web servers, the limit that prevents them from scaling is their own user-mode code rather than the kernel or CPU cache probing issues.

A high CPU usage without performance just reveals how much fat a parallelized process is dragging. Reasons range from bloated implementations to inadequate designs, both being increasingly visible as client concurrency grows (but the latter bites even harder).

Researchers have used existing software like IBM Apache to experiment with diverse parallelized strategies, locality and cache updates. What contributed to limit the range of their experiments is the overhead of the user-mode code used to measure more subtle interactions. Research will clearly need to focus on implementation to get relevant results with design issues.

Conclusion: 1,000-Core CPUs will make very few Web servers (and even fewer script engines) fly higher.
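The CPU figures in the tables on this page are given in jiffies, split into user and kernel time. The page does not say how they were collected. On Linux, one common way to read such counters is `/proc/<pid>/stat`, where `utime` and `stime` are fields 14 and 15, expressed in clock ticks. A minimal sketch, with a made-up sample line rather than real measurement data:

```python
def parse_cpu_jiffies(stat_line):
    """Extract (user, kernel, total) clock ticks from one /proc/<pid>/stat line."""
    # The command name (field 2) is parenthesised and may contain spaces,
    # so only the text after the last ')' is split on whitespace.
    fields = stat_line[stat_line.rindex(")") + 2:].split()
    user = int(fields[11])    # utime: field 14 of the full line
    kernel = int(fields[12])  # stime: field 15 of the full line
    return user, kernel, user + kernel


def read_cpu_jiffies(pid):
    """Read the counters of a live process (Linux only)."""
    with open(f"/proc/{pid}/stat") as f:
        return parse_cpu_jiffies(f.read())


# Hypothetical sample line, truncated after the stime field.
SAMPLE = "4242 (my server) S 1 4242 4242 0 -1 4194560 900 0 0 0 1500 4500"
print(parse_cpu_jiffies(SAMPLE))
```

These counters cover all threads of one process. For servers that run several worker processes, the values of each worker would have to be summed.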

Why does memory footprint matter?

Whether you are hosting several Web sites or need to run several applications on a single machine (or just want to get the best possible performance), using as little RAM as possible helps.

This is because: (a) accessing system memory is immensely slower than accessing the CPU caches and (b) those caches have a necessarily limited size.

The less memory you use, the more likely your code and data are to stay loaded in the fast CPU caches – and the faster your code executes.

Further, virtual servers necessarily offer small amounts of RAM as they share a physical machine between many users. Here, having a small memory footprint is a question of survival to avoid the deadly disk-swapping trap.
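To check a server's footprint on Linux, the memory a process actually occupies can be told apart from the address space it has merely reserved by reading `/proc/<pid>/status`. The sketch below assumes that the resident set size (`VmRSS`) is the relevant "footprint" figure and that `VmSize` is the reserved virtual size; the sample text is made up for illustration.

```python
def parse_memory_kb(status_text):
    """Return VmRSS and VmSize (in kB) found in the text of /proc/<pid>/status."""
    wanted = {"VmRSS", "VmSize"}
    found = {}
    for line in status_text.splitlines():
        key, _, value = line.partition(":")
        if key in wanted:
            found[key] = int(value.split()[0])  # value looks like "   5150 kB"
    return found


def read_memory_kb(pid):
    """Read the figures of a live process (Linux only)."""
    with open(f"/proc/{pid}/status") as f:
        return parse_memory_kb(f.read())


# Hypothetical sample, not real measurement data.
SAMPLE = "Name:\tserver\nVmSize:\t  512000 kB\nVmRSS:\t    5150 kB\nThreads:\t6\n"
print(parse_memory_kb(SAMPLE))
```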

At 150 KB, G-WAN leaves plenty of space for your code and data – without the monthly security holes.

[1]: We first learned about Nicolas Bonvin on the G-WAN forum, where (using the nickname "svm" and signing "DT") he posted a question about Varnish. We had no relationship with him before, during or after his tests.