ApacheBench & HTTPerf

People's beliefs and convictions are almost always gotten at second hand, and without examination.

HTTP benchmark tools: Apache's AB, Lighttpd's Weighttp, HTTPerf, and Nginx's "wrk"

If you believe in the merits of forming your own opinion and want to test a server or a Web application, then this page may help you. We wish we had found such a resource when we were facing a blank page in 2009, but very few benchmarks bother to document what they do, and even fewer explain why they do things one way or another.

This information is for Linux. We left the Windows world (after 30 years of monoculture) when we discovered, in 2009, how much better G-WAN performs on Linux.

What to avoid – and why

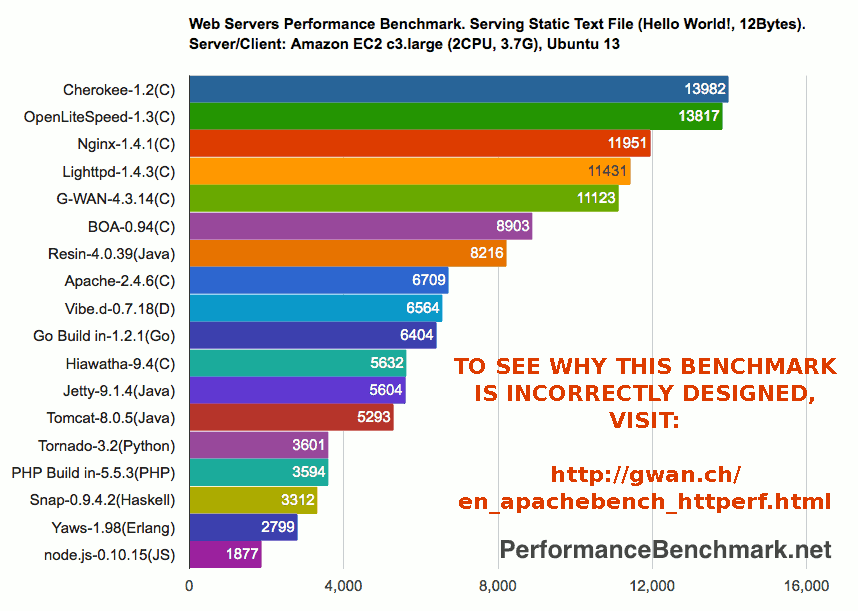

The web is used by more and more people. Many webmasters "copy and paste" scripts from blogs without understanding what they do. Things get worse when this is done by a startup or a consultant without the time and resources to do the necessary homework. The example below is real:

Let's start with the most obvious:

Here, two high-end 2014 CPUs serve fewer than 15k requests/second... while G-WAN is 10x faster on a single 2011 laptop CPU (an Intel Core i3)... with a 10x larger static file!

The test on the left was published anonymously on a blog. But the test showing 200x higher performance was done by an academic expert working in the "distributed systems" laboratory of a prestigious university.

How can a test be wrong by more than a factor of 200 (2 x 10 x 10)? This is what we explain on this page.

Know what you test

The HTTP Server

Most Web server downloads come with a tiny "it works" static HTML page. This is not the case with G-WAN: as an application server, it supports 17 languages and demonstrates them all, with a rich API, in the archive you have downloaded.

As a result, G-WAN loads the Java Virtual Machine, the C# Virtual Machine, the PH7 Virtual Machine, the C runtime, the C++ runtime, the Objective-C runtime, and so on. All of them create a memory footprint of tens of megabytes, to which are added by default all the G-WAN example scripts (init script, maintenance script, servlets, connection handlers, content-type handlers, and protocol handlers) to let people quickly try G-WAN's features.

So, before you make a benchmark, or attempt to check G-WAN's memory footprint, make sure you disable everything you don't want to use in your test. Enable or disable log files consistently for all the servers you compare. And before you conclude that the tests done on this site are irrelevant, drop us a line so we can help you check that you are doing it right.

This is what we have done with all the tests published on this site: we sent our test procedures and results to the authors of each server and asked them for suggestions. Sometimes, as with ORACLE Glassfish, our suggestions to slightly tune their code allowed them to more than double the performance of their server.

And, please, before all that, read this web page. You will most probably learn useful things. The kind of technical insights that made it possible for us to make G-WAN.

The Network

Testing web servers via the Internet is a common mistake. People believe that "it's a real-life test", but this is wrong: the network is the bottleneck, so you are not testing the web server. Instead, you are testing:

- the network latency

- the network bandwidth

- the network quality of service.

An ADSL link (usually in the 5-100 Mbps range) will not saturate a web server like G-WAN, which can fully use a 5,000 Mbps fiber link with a single multicore CPU.

OK, but with 50 ADSL links @ 100 Mbps then a test would really saturate G-WAN, right?

Wrong.

Latency (the delay between consecutive IP packets) is the bottleneck:

Even a 1 Gigabit LAN would make G-WAN look almost as slow as Nginx. You need a 40/100 Gigabit LAN (with tuned OS kernels, optimized drivers, high-end switches, etc.) to really saturate a web server like G-WAN.

For those who do not have access to such a test bed, there's a cheap, "optimal" solution available on every single computer. It offers the best available bandwidth and latency your web server, OS and CPU can provide (if properly configured). It's called localhost.

Some will (too) quickly conclude that there's no advantage in using a fast web server if the network is the limit. This is not true, because not all web servers can scale, especially when generating dynamic content. Here, the difference between a bad and a good server will be life and death.

The Payload

Keep in mind that Web servers do NOT receive or send data: the OS kernel does.

So, when you are serving a large file (a file that requires many TCP packets, each packet being 1,500 bytes in size) then you are testing the OS kernel rather than the Web server.

Nginx speed tests rely on an empty HTML file like 0.html. As Nginx caches file metadata (file size, date/time, etc.) such a test gives it an advantage as compared to other servers. Note that, because of a better userland program architecture and implementation, G-WAN is faster than Nginx, even with 0.html – and the G-WAN cache does not store empty files so it doesn't matter if it's enabled or not (by default caching is disabled in G-WAN v4.10+, see gwan/init.c to enable it).

To be more relevant, benchmarks aimed at measuring the efficiency of a Web server should use an embedded resource (like the Web 'beacon' of Nginx or G-WAN: a 43-byte transparent GIF pixel) to let each server show how good it is at parsing client requests and building a reply (otherwise you are testing the file system and the speed of your disk, or the Nginx cache).

To make the G-WAN /nop.gif URI work with Nginx, add this line to the server block of nginx.conf:

    location = /nop.gif { empty_gif; }

But the kernel also caches files in memory, right? That's true, but compared to G-WAN's cache, the kernel is more than twice as slow at this task (for small files), hence the value of benchmarking resources embedded in the HTTP server.

Is it right to test such a corner case? After all, web servers also have to deal with large static files.

First, as an application server, G-WAN generates dynamic content in memory. That's far from being a corner case: this is the primary purpose of G-WAN, and much of the served content is personalized, even for mere Web servers.

Second, the G-WAN cache turns disk-based resources into embedded resources. This, too, can be done for many small files. The G-WAN Key/Value store can also be used as a dynamic cache to accelerate database applications or other compute-intensive calculations like loan amortization.

Third, G-WAN is also faster than Nginx when serving large files. Here, we are just explaining how much faster G-WAN will be for small files and embedded resources because, heck, the purpose of these benchmarks is to compare the web servers (rather than different OS kernels, File Systems, types of Disks, Network Interface Cards, Switches, etc. which, by careful selection, may be used to either serve as a bottleneck or to demonstrate the superiority of a given web server).

HTTP options

For the same reason, HTTP Keep-Alives should be used to test Web servers: establishing new TCP connections is very slow – and this is done (again) by the OS kernel rather than by the Web server. When you create many new connections per second, you test the OS kernel, not the user-mode server application.

Further, modern Web applications heavily rely on HTTP Keep-Alives, making them more than relevant on today's Web 2.0 with AJAX, Comet, online Games, video streaming, Big Data, HPC, HF Trading, etc.

HTTP Protocol version

HTTP/1.1 makes Keep-Alives (persistent connections) the default, avoiding the need to establish a new TCP connection for each HTTP request. This is a useful evolution over HTTP/1.0, where Keep-Alives are an optional extension, and over HTTP/0.9, which lacks many other features.

Comparing servers that use different versions is increasingly irrelevant because HTTP/2 offers even more performance-oriented features like HTTP header compression, support for bundled resources, multiplexing, request prioritization, native (un-encoded) binary content, and streaming.

Know what you compare. Make sure you are using the same protocol version in all the servers.

OS kernel limits

In our G-WAN-based private Clouds, we use a recompiled kernel whose critical parts have been rewritten to deliver much higher performance (the largest datacenters have most likely done similar things to cut their hardware, electricity, floor space, and cooling costs).

With G-WAN, this custom kernel is 30x-50x faster than the official kernel. But other HTTP servers don't get such a boost because their user-mode code, rather than the OS kernel, is the bottleneck.

Make sure you are using the same OS version and configuration for all the HTTP servers you compare.

Hardware limits

Finally, even with a small 100-byte static file and HTTP Keep-Alives, most of the time is consumed by CPU address bus saturation due to broadcast snoops. This, as Intel R&D has acknowledged, is the next bottleneck to address.

Future multi-Core CPUs will only make things better for G-WAN and worse for all others because G-WAN has been designed to scale vertically before scaling horizontally.

Multi-Core CPUs

In 2000, Intel shipped the last single-Core (mainstream) CPU, the Pentium 4. All its successors have been multi-Core CPUs, making single-Core CPUs obsolete (in servers, desktops, tablets, smartphones, routers, etc.).

In the past, CPUs were faster by using a faster clock frequency. But at 4GHz vendors told us that heat dissipation problems became unmanageable at reasonable costs. To continue delivering more power, CPUs started to embed several small CPUs (the CPU 'Cores') printed at a lower scale.

Programs that do not exploit the new CPU Cores will not run much faster on new CPUs. Established software vendors face a serious challenge because their product lines were designed at a time when parallelism was not a concern on mass-market PCs and servers.

Around 2020, Moore's law will collapse as transistors reach the size of an atom, making it impossible to stack more Cores in CPUs. Then, making more powerful CPUs will require breaking the laws of physics as we know them today. In the meantime, writing more efficient software (and enlarging CPU caches) are the only ways to make computers run faster.

To test SMT (Simultaneous Multi-Threading, called "Hyper-Threading" by Intel) or CMP (Chip Multi-Processing, called "multicore") server software, that is, software that takes advantage of logical processors able to run threads in parallel, you need to use CPUs with many Cores (and use as many workers on the client and server sides).

On Intel platforms, SMT can lead to a 30% performance gain (in the relatively rare cases where memory latencies can be avoided when interleaving the two threads' instructions in the pipeline), while CMP theoretically delivers a 100% gain per additional Core (this is moderated by factors like cache coherency and access to system memory, which is much slower than CPU caches).

SMP/CMP Web servers can either use several processes (like Nginx) or one process and several threads (like G-WAN).

Our tests show that using one single process and several threads saves both CPU and RAM – and delivers better performance – because this architecture spares the need for redundant plumbing protected by locks and duplicated resources.

Not all 3.0 GHz CPUs are Equal

All our single-socket 6-Core tests are made with this Mac Pro CPU (identified as follows in the gwan.log file):

Intel(R) Xeon(R) CPU W3680 @ 3.33GHz (6 Cores/CPU, 2 threads/Core)

We use two of such machines to make the LAN-based tests.

But (extensive) third-party CPU tests show that many same-frequency CPUs are not as fast (in 2012, some same-frequency CPUs are 5 times slower and a few others are 1.5 times faster). You can identify your CPU here.

For example, this 2011 8-Core AMD CPU @ 4.2GHz is slower than our 2010 6-Core Xeon W3680 @ 3.33GHz.

You need this 2011 16-Core AMD CPU @ 2.1GHz to be as powerful as our 2010 6-Core Xeon W3680 @ 3.33GHz.

But even this 2012 4-Core Intel CPU @ 1.8GHz is 3x slower than our 2010 6-Core Xeon W3680 @ 3.33GHz.

Moral of the story: our "3.33 GHz Xeon CPU" is 20 times faster than a "3.06 GHz Xeon CPU". Neither the number of CPU Cores nor the clock frequency always reflects the power of a CPU. The exact CPU reference (stored by G-WAN in the gwan.log file) is needed to really identify the test platform.

And the same kind of approximations (or omissions) in the testbed environment (OS type and configuration, hardware, drivers, network devices) lead to similar inaccuracies, making it impossible to validate or understand undocumented results.

Linux Distribution and Release

Use a 64-bit Linux distribution. Even when G-WAN runs as a 32-bit process, a 64-bit kernel works twice as fast as a 32-bit kernel. This is what our tests have shown over the last two years, and this is probably the easiest way for you to save on hardware and energy consumption, if you really need that much performance.

Note that Ubuntu 10.10 64-bit is faster and more scalable than Ubuntu 12.04 LTS 64-bit (which uses twice as much kernel CPU time to serve fewer requests, as easily seen with our ab.c benchmarking tool described below).

It means that newer kernel and LIBC releases are not necessarily better in terms of performance. There's no substitute for tests if you want insights you can rely on.

Also note that, on Linux 64-bit, G-WAN 32-bit is slightly faster than G-WAN 64-bit, proof that the advice above, while valid for the Linux OS kernel, is not an absolute rule for all software. You may want to use G-WAN 32-bit if you do not need more than 2 GiB of RAM (the theoretical limit is close to 2.5 GiB, as the kernel reserves some virtual address space for its own needs).

Firewalls, Packet Statistic or Packet Filtering (IP TABLES, PCAP, etc.)

IP TABLES are very expensive in terms of CPU because they apply many rules to each packet traveling on the network. Even stateful firewalls have to keep track of established connections, and this takes time, delaying network traffic.

As a typical IP TABLES configuration will divide G-WAN's performance by two, performance tests cannot seriously be done without either disclosing that IP TABLES are used (and how) or, even better, disabling this bottleneck for the duration of the tests, for all the compared Web servers.

System configuration

If you use a default OS installation then not all the resources of your hardware can be used: the default settings may be designed for client needs, or to save resources, but they set a limit to what can be done. Therefore, you must do some tuning to let applications and the OS kernel fully use the hardware.

The first issue is the lack of file descriptors (the default is only 1,024 files per process, resulting in very poor performance).

The second issue is the lack of TCP port numbers. As it takes time to fully close connections lingering in the TIME_WAIT state, the number of available ports quickly decreases, and new connections cannot be established until the system releases them. The default client port range can be as small as [1,024 - 5,000] (recent Linux kernels use [32,768 - 60,999]) and must be extended to the whole [1,024 - 65,535] ephemeral port range.

If the port range is not extended, you will quickly hit the TIME_WAIT wall, and the AB, HTTPerf or Weighttp tools will produce errors like:

"error: connect() failed: Cannot assign requested address (99)"

To avoid these issues and improve general performance, you have to change the following system options:

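ulimit -aH (this shows your hard limits; ulimit -Hn shows only the open files limit)

ulimit -HSn 200000 (this sets the soft and hard open files limits for the current shell and the programs it starts; raising the hard limit requires a root shell, e.g. one opened with sudo -i)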

To make the following options permanent (available after a reboot) you must edit a couple of system configuration files:

Edit the file /etc/security/limits.conf:

sudo gedit /etc/security/limits.conf

And add the values below:

    * soft nofile 200000
    * hard nofile 200000

Edit the file /etc/sysctl.conf:

sudo gedit /etc/sysctl.conf

And add the values below:

    # "Performance Scalability of a Multi-Core Web Server", Nov 2007
    # Bryan Veal and Annie Foong, Intel Corporation, Page 4/10
    fs.file-max = 5000000
    net.core.netdev_max_backlog = 400000
    net.core.optmem_max = 10000000
    net.core.rmem_default = 10000000
    net.core.rmem_max = 10000000
    net.core.somaxconn = 100000
    net.core.wmem_default = 10000000
    net.core.wmem_max = 10000000
    net.ipv4.conf.all.rp_filter = 1
    net.ipv4.conf.default.rp_filter = 1
    net.ipv4.ip_local_port_range = 1024 65535
    net.ipv4.tcp_congestion_control = bic
    net.ipv4.tcp_ecn = 0
    net.ipv4.tcp_max_syn_backlog = 12000
    net.ipv4.tcp_max_tw_buckets = 2000000
    net.ipv4.tcp_mem = 30000000 30000000 30000000
    net.ipv4.tcp_rmem = 30000000 30000000 30000000
    net.ipv4.tcp_sack = 1
    net.ipv4.tcp_syncookies = 0
    net.ipv4.tcp_timestamps = 1
    net.ipv4.tcp_wmem = 30000000 30000000 30000000

    # optionally, avoid TIME_WAIT states on localhost no-HTTP Keep-Alive tests:
    # "error: connect() failed: Cannot assign requested address (99)"
    # On Linux, the 2MSL time is hardcoded to 60 seconds in /include/net/tcp.h:
    # #define TCP_TIMEWAIT_LEN (60*HZ)
    # The option below is safe to use:
    net.ipv4.tcp_tw_reuse = 1

    # The option below lets you reduce TIME_WAITs further
    # but this option is for benchmarks, NOT for production (NAT issues)
    # (tcp_tw_recycle was removed in Linux 4.12: omit it on newer kernels)
    net.ipv4.tcp_tw_recycle = 1

Save the file and make the system reload it:

sudo sysctl -p /etc/sysctl.conf

The options above are important because values that are too low just block benchmarks. You will find other options in the ab.c wrapper described below.

If enabled, SELinux may prevent G-WAN from raising the number of file descriptors. If this is the case, apply the following SELinux module:

    /usr/sbin/semodule -DB
    service auditd restart
    service gwan restart
    grep gwan /var/log/audit/audit.log | audit2allow -M gwan_maxfds
    semodule -i gwan_maxfds.pp
    service gwan start
    Starting gwan: [ OK ]
    /usr/sbin/semodule -B

The number of file descriptors used by G-WAN can be found in /proc:

    cat /proc/`ps ax | grep gwan | grep -v grep | awk -F " " '{print $1}'`/limits | grep "Max open files"

    Max open files 2048 2048 files

This is good for a one-time check, but don't use the above command to constantly monitor G-WAN, use the more efficient ab.c program described below.

Virtualization (Hypervisors)

Virtualization is another hardware abstraction layer on top of the OS kernel (which, to avoid more bugs, additional critical security holes and further loss of performance, is the only abstraction layer that we should be running on any given machine).

And it is not only slower: it also has a completely different performance profile because everything is encapsulated with new code (for example, memory allocation is notoriously penalized by virtualization, even more than other tasks).

One area where hypervisors are a notorious nuisance is in the (altered) detection of the CPU topology (number of CPUs, Cores and Threads per Core).

For a mysterious reason, hypervisors feel the need to corrupt the return values of the CPU CPUID instruction and the Linux kernel /proc/cpuinfo structure. Both were designed for the sole purpose of letting multi-threaded applications like G-WAN scale on multicore systems.

Some Linux distributions have recently innovated in this matter by also altering what the CPU CPUID instruction returns.

Of course, the broken CPU topology detection won't affect single-threaded servers like Nginx or Apache but this will make G-WAN underperform, by a factor two or more. Even more sneakily, the ab.c test tool described here will also be affected – but only for multi-threaded servers like G-WAN.

So, instead of having the OS kernel as the bottleneck (like on a normal machine), you have a (much) slower 'virtual machine' as the new bottleneck (see "Multi-Core scaling in a virtualized environment").

Unsurprisingly, if the speed is limited to 30km/h, then even a sports car will not 'run faster' than a bicycle.

Beware what you are testing.

The TCP/IP stack needs to be warmed-up

If you run the same AB (Apache Benchmark) test twice then you don't get the same results. Why?

Because the TCP/IP stack works in a conservative manner: it starts slowly and gradually increases its speed as the traffic grows. When a new TCP connection is created, there is no way to know whether the server is capable of receiving as fast as the client can send.

So the client has to send some data and then wait for the server to acknowledge that all of it was received. Then it can gradually increase its speed until the receiving side can no longer cope (either the server or the network is overwhelmed, or the traffic is rerouted to a slower/longer path). As a result, one short shot does not give the stack enough time to reach its optimal state, and such a test will hide how fast a server is and how well it can cope with a growing load.

This is why small isolated AB tests are so variable and so much lower in relevance than longer tests. And since a test needs to last longer to be relevant, why not use this time to check how the tested web server (or web application) is behaving as the number of concurrent users is growing? Why not check how much RAM and CPU resources the application and the system are consuming?

Here you will see how to do all this – with one single command.

Caching vs. non-Caching

The very existence of G-WAN has created a debate because its in-memory caches (for static and dynamic content), when enabled, more than double its performance (by removing the disk I/O bottleneck that... the OS kernel caching is supposed to eliminate).

With G-WAN's goal being scalability, if an OS feature does not deliver the promised benefits, then G-WAN feels a duty to fill the gap. The question to ask is why mainstream servers did not do it before.

The authors of other servers stated that it was not fair to compare (G-WAN + cache) to Nginx or Apache2, despite the fact that those servers also employ various caching strategies (albeit much slower ones), like a memcached server or locally cached file blocks and metadata (open file descriptors, file size, etc.).

Why hidden caching strategies would be more legitimate than publicly disclosed ones (unlike G-WAN tests, many Web server benchmarks do not document their system tweaking and configuration files) is another interesting question.

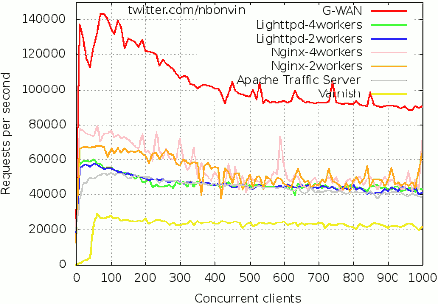

Further, if dedicated cache servers like Apache Traffic Server (ATS) or Varnish are widely compared to Web servers like Nginx or Apache2 then rejecting the same test for G-WAN is... just not fair.

Since version 4.7 G-WAN has made it possible to disable its caches, and since v4.10 it disables caching by default (see the gwan/init.c file). This way, users can check that G-WAN is faster than others, whether caching is used or not.

Not all Benchmark Tools are created equal

As we have seen, one recurring issue with performance tests is the very relevance of the test. But one of the most effective ways to distort a test is to use a benchmarking client that is slower than the tested server.

A server cannot send answers faster than it receives queries.

A surprisingly high number of recent "performance tests" insist on using completely irrelevant benchmark tools, either because they are obsolete or because their architecture and implementation cannot match the performance of the tested servers.

Using a slow (single-thread, and/or Java, Python, Ruby, etc.) benchmark tool makes it possible to "demonstrate" that all the tested servers have similar performances because what is tested here is no longer the server but rather the client tool.

Below, we will discuss the most widely known benchmarking tools and their relevance.

IBM - ApacheBench (AB)

To install ApacheBench:

sudo apt-get -y install apache2-utils

Basic usage (ab -h for more options):

ab -n 100000 -c 100 -t 1 -k "http://127.0.0.1:8080/100.html"

- -n ........ number of HTTP requests

- -c ........ number of concurrent connections

- -k ........ enable HTTP keep-alives

- -t ......... number of seconds of the test (per the ab manual, -t implies -n 50000 internally)

AB is reliable, simple to understand and easy to use. Its only defects are a relatively high CPU usage and its inability to put under pressure SMT (Simultaneous Multi-Threading, called "Hyper-Threading" by Intel) or CMP (Chip Multi-Processing, called "multicore") servers which use several worker threads.

This is because AB (like many others, including Siege) uses one single thread and an outdated event polling method. AB was made at a time when multi-Core CPUs did not exist (before 2001), and this now makes AB mostly irrelevant to test the load of a modern multi-threaded server.

Knowing this, single-threaded servers usually use AB to compare themselves to multi-threaded servers. This is because they are much slower with multi-threaded clients like Weighttp presented below. See our comparison of AB and weighttp benchmarks.

Lighttpd - Weighttp (WG)

Like IBM AB, Weighttp has been written by Web server authors, probably because they felt the (real) need for a serious HTTP stress tool able to test modern multi-Core CPUs. To install Weighttp:

    wget http://github.com/lighttpd/weighttp/zipball/master
    unzip lighttpd-weighttp-v0.2-6-g1bdbe40.zip
    cd lighttpd-weighttp-v0.2-6-g1bdbe40
    sudo apt-get install libev-dev
    gcc -g2 -O2 -DVERSION='"123"' src/*.c -o weighttp -lev -lpthread
    sudo cp ./weighttp /usr/local/bin

Basic usage (weighttp -h for more options):

weighttp -n 100000 -c 100 -t 4 -k "http://127.0.0.1:8080/100.html"

- -n ........ number of HTTP requests

- -c ........ number of concurrent connections (default: 1)

- -k ........ enable HTTP keep-alives (default: none)

- -t ......... number of threads of the test (default: 1, use one thread per CPU Core)

Based on epoll on Linux, Weighttp is much faster than AB – even with one single thread. But its real value is when you are using as many threads/processes as you have CPU Cores on the server you target because THIS IS THE ONLY WAY TO REALLY TEST A SMP/CMP SERVER (that is, a server using several worker threads attached to logical processors – by default G-WAN uses one thread per CPU Core).

With Weighttp being so fast, you will almost certainly hit the TIME_WAIT state wall (see the TIME_WAIT fix above in the "System Configuration" paragraph).

Weighttp is by far the best stress tool we know today: it uses the clean AB interface and works reasonably well. It could be made even faster by using leaner code, but there are not many serious coders investing their time to write decent client tools, it seems.

Hewlett Packard - HTTPerf

Basic usage (httperf -h for more options):

httperf --server=127.0.0.1 --port=8080 --rate=100 --num-conns=100 --num-calls=100000 --timeout=5 --hog --uri=/100.html

Yes, HTTPerf is more complex than AB. This is visible at first glance in its syntax.

Moreover, HTTPerf does not let you specify the concurrency level or the duration of the test. Instead, you set:

- --num-calls ........... number of HTTP requests per connection (> 1 for keep-alives)

- --num-conns ........ total number of connections to create

- --rate ................... number of connections to start per second

If we want 100,000 HTTP requests, we have to calculate which '--num-conns' and '--num-calls' values to specify for a given '--rate':

nbr_req = num-conns * num-calls (and the offered load is rate * num-calls requests per second)

A larger 'num-conns' makes the test last longer (about num-conns / rate seconds), and 'num-conns' must always be >= 'rate' to sustain a given 'rate' for at least one second.

HTTPerf takes great care to create new connections progressively and it only collects statistics after 5 seconds. This was probably done to 'warm-up' servers that have problems with 'cold' starts and memory allocation.

Removing this useful information from benchmark tests makes them NOT reflect reality (where clients send requests on short but intense bursts).

Also, HTTPerf's pointlessly long shots for each test make the TIME_WAIT state become a problem (see the TIME_WAIT fix above in the "System Configuration" paragraph).

Finally, HTTPerf cannot test client concurrency accurately: if rate=1 but num-conns=2 and num-calls=100000, you will most likely end up with concurrent connections (despite rate=1) because not all the HTTP requests of the first connection will have been processed when the second connection is launched.

And if you use a smaller num-call value then you are testing the TCP/IP stack (creating TCP/IP connections is slow and this is done by the kernel, not by the user-mode HTTP server or Web application that you want to test).

As a result, HTTPerf can only be reliably used without HTTP Keep-Alives (with num-calls=1). And even in this case, we have found ApacheBench (and even more so weighttp) to be a far better proposition.

The (long) story of Nginx's "wrk"

We discovered wrk very late (5 years after G-WAN's first release), after it was mentioned in a stackoverflow question about G-WAN. wrk was written in late 2012 according to GitHub, and it is presented on various Web sites as "the benchmark tool written for Nginx". The fact that its HTTP parser is borrowed from Nginx's source code confirms this statement.

    Usage: wrk <options> <url>
      Options:
        -c, --connections <N>  Connections to keep open
        -d, --duration    <T>  Duration of test
        -t, --threads     <N>  Number of threads to use

In contrast with AB or weighttp, wrk creates all TCP connections first, and only then sends HTTP requests.

There's a "benchmark" where Nginx is presented as processing 500,000 requests/second and later even 1 million RPS, with a version of wrk modified to support pipelining (oddly, a feature removed from wrk after this test).

This test, using a server with two 6-Core CPUs, did not take into account the ability of Nginx to accept or close many TCP connections quickly: they were all pre-established.

In contrast, with half the CPUs and CPU Cores, G-WAN achieves 850,000 requests per second... TCP handshakes included!

Under common circumstances, wrk biases HTTP benchmarks because:

- real Web users send an HTTP request immediately after the TCP handshake;

- wrk masks the ability (or the inability) of a server to quickly accept and close connections;

- G-WAN's DoS shield cuts established TCP connections that wait too long before providing HTTP requests.

If you use wrk with a duration (-d) exceeding G-WAN's timeout, or with so many connections (-c) that establishing them exceeds G-WAN's timeout, then G-WAN will cut most of those idle connections because, missing an HTTP request, they look like a Denial of Service (DoS) attack. As wrk reports those connection closes as "I/O errors", some users incorrectly concluded that G-WAN could not cope with the same load that Nginx handles (...if configured with the proper options).

But if you stay below G-WAN I/O timeouts then G-WAN passes the wrk test with better results than Nginx (nginx.conf):

wrk -d 5 -t6 -c N "http://127.0.0.1:8080/nop.gif"

             ┌───────────────────────┬───────────────────────┐
             │         G-WAN         │         NGINX         │
    ┌────────┼────────────┬──────────┼────────────┬──────────┤
    │   N    │    RPS     │ IO Rate  │    RPS     │ IO Rate  │
    ├────────┼────────────┼──────────┼────────────┼──────────┤
    │    100 │ 668,884.20 │ 188.18MB │ 101,627.86 │  22.78MB │
    ├────────┼────────────┼──────────┼────────────┼──────────┤
    │     1k │ 648,054.56 │ 182.32MB │ 314,922.46 │  70.58MB │
    ├────────┼────────────┼──────────┼────────────┼──────────┤
    │    10k │ 456,831.62 │ 128.52MB │ 301,124.29 │  67.49MB │
    ├────────┼────────────┼──────────┼────────────┼──────────┤
    │   100k │     141.91 │  40.88KB │       4.04 │   0.93KB │
    └────────┴────────────┴──────────┴────────────┴──────────┘

Here G-WAN starts with a 2.2 MB footprint while nginx (which needs settings for heavy loads to pass this test) starts with a 454.73MB memory footprint (see the next table for more details).

Nginx pre-allocates buffers before receiving the first connection, so you have to make room for many connections in the nginx.conf file.

Unlike fixed configuration-file options, G-WAN's adaptive values let resources be allocated dynamically, on a per-need basis.
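The C sketch below illustrates allocation on a per-need basis, as opposed to pre-allocating for a configured maximum. It is a connection table that starts empty and doubles its capacity only when a new connection needs a slot. It is not G-WAN's (or Nginx's) code. The conn_t and conn_pool_t types, the 256-byte buffer and the initial 16 slots are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical per-connection state; a real server keeps much more. */
typedef struct {
    int fd;
    char buf[256];
} conn_t;

/* Connection table that grows on demand instead of being sized up front. */
typedef struct {
    conn_t *slots;
    size_t used;
    size_t capacity;
} conn_pool_t;

/* Returns a slot for a new connection, doubling the table (starting at 16
   slots) only when it is full. Returns NULL if memory is exhausted.
   realloc() may move the table, so callers should keep slot indices
   rather than pointers across calls. */
static conn_t *pool_acquire(conn_pool_t *pool)
{
    assert(pool != NULL);
    if (pool->used == pool->capacity) {
        size_t new_cap = pool->capacity ? pool->capacity * 2 : 16;
        conn_t *grown = realloc(pool->slots, new_cap * sizeof *grown);
        if (grown == NULL)
            return NULL;              /* the old table is still valid */
        pool->slots = grown;
        pool->capacity = new_cap;
    }
    return &pool->slots[pool->used++];
}

/* Releases the whole table. */
static void pool_free(conn_pool_t *pool)
{
    free(pool->slots);
    pool->slots = NULL;
    pool->used = pool->capacity = 0;
}
```

Doubling keeps the number of realloc() calls logarithmic: 7 calls reach 1,024 slots. Memory is committed only for the load actually seen.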

The last case above, at 100,000 connections, is purposely pathological: it shows how wrk copes with an impossible mission. All connections target a single server address and port (127.0.0.1:8080) from a single client IP address, so each one needs its own client port. At best there are 65,536 - 1,024 = 64,512 unprivileged ports available, far fewer than the 100,000 needed. Binding the client sockets to several different IP addresses would help, because a TCP connection is identified by both its IP addresses and both its ports.
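The port arithmetic can be made explicit. A TCP connection is identified by its (source IP, source port, destination IP, destination port) tuple. With a fixed destination such as 127.0.0.1:8080, each client source address can hold at most one connection per local port.

The minimal C sketch below uses hypothetical helper names. It counts the ports in an inclusive range and the number of client source addresses that a given number of connections would require.

```c
#include <assert.h>

/* Number of ports in the inclusive range [first, last], e.g. 1024..65535. */
static unsigned long port_count(unsigned first, unsigned last)
{
    assert(first <= last && last <= 65535);
    return (unsigned long)(last - first) + 1;
}

/* With a fixed destination (e.g. 127.0.0.1:8080), each client source IP can
   hold at most one connection per local port in the range. */
static unsigned long max_client_connections(unsigned first, unsigned last,
                                            unsigned source_ips)
{
    return port_count(first, last) * source_ips;
}

/* Smallest number of client source IPs needed to open `wanted` connections
   to a single destination address and port. */
static unsigned long source_ips_needed(unsigned long wanted,
                                       unsigned first, unsigned last)
{
    unsigned long per_ip = port_count(first, last);
    return (wanted + per_ip - 1) / per_ip;   /* rounded up */
}
```

For example, ports 1,024 to 65,535 give 64,512 ports, so 100,000 connections need at least 2 client addresses. The common Linux default ephemeral range, 32768-60999 (net.ipv4.ip_local_port_range), offers only 28,232 ports per address.

On Linux, the whole 127.0.0.0/8 block is routed to the loopback interface. Clients can therefore bind to 127.0.0.2, 127.0.0.3, etc.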

Now, let's do something more difficult, with connection series that grow by 100k clients, up to 1 million concurrent clients:

./abc [0-1m:100k+100kx1] "127.0.0.1:8080/nop.gif"

G-WAN

Clients, RPS, CPU user, CPU kernel, RAM SRV MB, RAM SYS MB, Time

----------------------------------------------------------

1, 74348, 92, 325, 2.21, 0.0, 10:29:28

100000, 31161, 104, 484, 29.55, 0.6, 10:29:31

200000, 103890, 201, 735, 29.68, 0.1, 10:29:32

300000, 29715, 93, 321, 38.07, 0.7, 10:29:35

400000, 105434, 212, 739, 38.07, 0.2, 10:29:36

500000, 25985, 105, 314, 51.91, 0.7, 10:29:40

600000, 97000, 190, 689, 51.91, 0.2, 10:29:41

700000, 25271, 89, 336, 53.07, 0.8, 10:29:45

800000, 31639, 269, 670, 53.33, 0.0, 10:29:49

900000, 25621, 138, 410, 64.34, 0.8, 10:29:52

1000000, 84426, 279, 699, 64.34, 0.4, 10:29:54

----------------------------------------------------------

Total RPS:634,490 Time:29 second(s) [00:00:29]

----------------------------------------------------------

NGINX

Clients, RPS, CPU user, CPU kernel, RAM SRV MB, RAM SYS MB, Time

----------------------------------------------------------

1, 27275, 29, 106, 567.67, 0.0, 10:43:16

100000, 29917, 135, 344, 596.06, 0.8, 10:43:19

200000, 62279, 81, 199, 587.53, 0.1, 10:43:21

300000, 30057, 95, 285, 606.52, 0.9, 10:43:24

400000, 68045, 74, 174, 591.85, 0.2, 10:43:25

500000, 28827, 99, 203, 596.98, 0.9, 10:43:29

600000, 62716, 75, 196, 595.05, 0.3, 10:43:31

700000, 28161, 96, 250, 606.65, 1.1, 10:43:34

800000, 55241, 58, 164, 586.46, 0.4, 10:43:36

900000, 27468, 117, 273, 604.63, 1.1, 10:43:40

1000000, 53484, 84, 198, 595.52, 0.5, 10:43:42

----------------------------------------------------------

Total RPS:473,470 Time:31 second(s) [00:00:31]

----------------------------------------------------------

This time, we used weighttp through our abc wrapper to sweep a concurrency range from 0 to 1 million clients, in steps of 100k clients, each step sending 100k requests in a single round.

How does weighttp manage to reach 1 million concurrent clients when wrk died at a mere 100,000 connections?

weighttp establishes (and closes) connections on the fly. This lets it reach much higher concurrencies on localhost, albeit at the cost of performance, as we can see here.

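RPS results are lower and 'shaky' because the faster a server is, the faster free local ports are consumed, leaving none to establish new connections. A closed TCP connection keeps its port in the TIME_WAIT state for a while on the side that closed it.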

This example demonstrates how fragile the relevance of a tool or test procedure can be.

Vendors may seek to present big numbers to attract users, but numbers make sense only when people understand the nature of the test, and how much tweaking a server requires to merely pass it.

But there is one area where we have found wrk's particularities useful: concurrencies in the 10,000-50,000 range. Below 10,000 connections wrk is slower, but above that point its pre-allocation tactic helps it scale where weighttp suffers on localhost.

To use less CPU (and be even faster), G-WAN could cache file blocks and metadata (open file descriptors, file blocks, existence/size/time/type attributes) like Nginx does. A future version of G-WAN may do it. But the tests above show that, for now, without any kind of caching, G-WAN is faster than Nginx because G-WAN's architecture and implementation are better.
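The kind of caching mentioned above is similar in spirit to Nginx's open_file_cache directive. The idea is to keep the file descriptor open and remember the file's size and modification time, so that most requests need no open() or stat() call.

The C sketch below shows this technique. It is not Nginx's implementation, nor a planned G-WAN design. The slot count, the 5-second revalidation delay and the names are hypothetical. A real cache would also evict entries and detect files replaced by rename(), for example by comparing inode numbers.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <string.h>
#include <sys/stat.h>
#include <time.h>
#include <unistd.h>

#define CACHE_SLOTS   8     /* hypothetical: tiny on purpose */
#define CACHE_TTL_SEC 5     /* hypothetical revalidation delay */

typedef struct {
    char   path[256];
    int    fd;        /* kept open and reused across requests */
    off_t  size;
    time_t mtime;
    time_t checked;   /* last time the attributes were refreshed */
} file_entry_t;

static file_entry_t cache[CACHE_SLOTS];
static int cache_used;

/* Refreshes size and mtime from the open descriptor; returns 0 on success. */
static int refresh(file_entry_t *e, time_t now)
{
    struct stat st;
    if (fstat(e->fd, &st) != 0)
        return -1;
    e->size = st.st_size;
    e->mtime = st.st_mtime;
    e->checked = now;
    return 0;
}

/* Returns the cached entry for `path`. open() and fstat() run only on a miss,
   or when the entry is older than CACHE_TTL_SEC. Returns NULL if the file
   cannot be opened or if the cache is full (a real cache would evict). */
static const file_entry_t *file_lookup(const char *path)
{
    time_t now = time(NULL);
    assert(path != NULL && strlen(path) < sizeof cache[0].path);

    for (int i = 0; i < cache_used; i++) {
        file_entry_t *e = &cache[i];
        if (strcmp(e->path, path) != 0)
            continue;
        if (now - e->checked < CACHE_TTL_SEC)
            return e;                     /* hit: no system call at all */
        return refresh(e, now) == 0 ? e : NULL;
    }
    if (cache_used == CACHE_SLOTS)
        return NULL;

    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return NULL;                      /* a real cache may also remember misses */
    file_entry_t *e = &cache[cache_used];
    strcpy(e->path, path);
    e->fd = fd;
    if (refresh(e, now) != 0) {
        close(fd);
        return NULL;
    }
    cache_used++;
    return e;
}
```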

This matters because, on modern multicore systems, server applications are limited by how well they use the CPU. The CPU Cores are typically underused, pointlessly waiting for work because of the poor multicore scalability of server code. Being able to saturate a greater bandwidth by making better use of your CPUs lets you do more work with fewer machines.

At this point, you may wonder why we do not use a LAN instead of localhost. A 1 Gigabit NIC is limited to ~100 megabytes per second of payload (1 Gbit/s is 125 MB/s before protocol overhead). In the wrk test above, Nginx would not be limited: it transferred at most 70 MB per second. But G-WAN would be slowed down by almost a factor of two(!), as it processed 188 MB per second.

Using a 1 Gigabit link in performance tests would make G-WAN look almost as slow as Nginx, unless you have a 10/40/100 Gigabit LAN (with tuned OS kernels, optimized drivers, high-end switches, etc.) available on your desk to run tests.
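The LAN argument is simple arithmetic. A link carrying B bits per second moves at most B/8 bytes per second. A server whose localhost throughput exceeds that is slowed down by the ratio of the two.

The C sketch below uses hypothetical helper names. It ignores TCP/IP and Ethernet framing, so real ceilings are somewhat lower.

```c
#include <assert.h>

/* Upper bound on responses per second that a link can carry, ignoring
   TCP/IP and Ethernet framing overhead (the real ceiling is lower). */
static double max_rps_on_link(double link_bits_per_sec, double bytes_per_response)
{
    assert(bytes_per_response > 0.0);
    return link_bits_per_sec / 8.0 / bytes_per_response;
}

/* Slowdown a link would impose on a server that measured
   `local_bytes_per_sec` on localhost; 1.0 means the link is not the
   bottleneck. */
static double link_slowdown(double local_bytes_per_sec, double link_bits_per_sec)
{
    double link_bytes_per_sec = link_bits_per_sec / 8.0;
    return local_bytes_per_sec > link_bytes_per_sec
         ? local_bytes_per_sec / link_bytes_per_sec
         : 1.0;
}
```

The text assumes ~100 MB/s of usable payload. Against that figure, G-WAN's 188 MB/s gives a slowdown of about 1.9, while Nginx's 70 MB/s fits within the link.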

To recapitulate: weighttp works very well up to 10,000 clients. wrk, which is slower below that point, works better for higher concurrencies up to 50,000 (which seems to be its limit on localhost). The next 10-second test confirms our prior findings:

G-WAN

wrk -d 10 -c 50000 -t 6 http://localhost:8080/nop.gif

Running 10s test @ http://localhost:8080/nop.gif

6 threads and 50000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 52.22ms 161.94ms 882.82ms 95.60%

Req/Sec 71.61k 6.51k 92.32k 77.27%

4156389 requests in 10.02s, 1.14GB read

Socket errors: connect 0, read 0, write 0, timeout 139871

Requests/sec: 414,625.76

Transfer/sec: 116.65MB

NGINX

wrk -d 10 -c 50000 -t 6 http://localhost:8080/nop.gif

Running 10s test @ http://localhost:8080/nop.gif

6 threads and 50000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 200.72ms 267.68ms 815.53ms 79.69%

Req/Sec 39.63k 10.04k 51.73k 63.51%

2213810 requests in 10.00s, 496.14MB read

Socket errors: connect 0, read 0, write 0, timeout 15336

Requests/sec: 221,364.51

Transfer/sec: 49.61MB

At a concurrency of 50,000 clients, wrk shows that G-WAN is almost twice as fast as Nginx (414,625 vs. 221,364 requests/sec). It also has a much lower average latency (52 ms vs. 201 ms), even though Nginx sends less verbose HTTP headers than G-WAN.

There are proportionally more timeouts, but this is expected when the kernel is pushed to its limits (G-WAN processes many more requests and a larger payload).

Also, in all fairness, while G-WAN could be slowed down to avoid these timeouts, Nginx is its own bottleneck and can't do any better.

The tests above show that G-WAN is faster whatever the concurrency and the test tool. This point is important to reach a consensus: G-WAN stands on firmer ground in this area because we use benchmark tools made by others.

As we have seen, selecting a benchmark tool is not easy, as the proper choice depends on the environment (server hardware, network), the load, the type of requests (embedded resources, static file sizes, dynamic contents), and the concurrency.

Lighttpd's weighttp and Nginx's wrk both have legitimate uses, and this is probably what makes benchmarking such a difficult matter. It requires a lot of time to understand how things really work, and enough honesty to recognize one's errors. After all, like many, we started with AB, until we discovered a "better tool" in weighttp.

Comparing ApacheBench and Weighttp

We said that weighttp is better than AB. But how true is this? It depends on the number of CPU Cores: the more Cores you have, the faster weighttp is compared to AB. AB was designed at a time when multicore did not exist: it runs in a single thread, while weighttp can use several threads with its -t option:

// -------------------------------------------------------------------------------------

// ab test: 7.1 seconds and 140,680 req/s

// -------------------------------------------------------------------------------------

ab -k -n 1000000 -c 300 http://127.0.0.1:8080/100.html

Server Software: G-WAN

Server Hostname: 127.0.0.1

Server Port: 8080

Document Path: /100.html

Document Length: 100 bytes

Concurrency Level: 300

Time taken for tests: 7.108 seconds

Complete requests: 1000000

Failed requests: 0

Write errors: 0

Keep-Alive requests: 1000000

Total transferred: 377000000 bytes

HTML transferred: 100000000 bytes

Requests per second: 140680.34 [#/sec] (mean)

Time per request: 2.132 [ms] (mean)

Time per request: 0.007 [ms] (mean, across all concurrent requests)

Transfer rate: 51793.44 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 0 0.1 0 15

Processing: 0 2 0.1 2 6

Waiting: 0 2 0.1 2 6

Total: 0 2 0.1 2 17

Percentage of the requests served within a certain time (ms)

50% 2

66% 2

75% 2

80% 2

90% 2

95% 2

98% 2

99% 2

100% 17 (longest request)

// -------------------------------------------------------------------------------------

// weighttp test: 1.7 seconds and 595,305 req/s

// -------------------------------------------------------------------------------------

weighttp -k -n 1000000 -c 300 -t 4 http://127.0.0.1:8080/100.html

finished in 1 sec, 679 millisec and 808 microsec, 595305 req/s, 205217 kbyte/s

requests: 1000000 total, 1000000 started, 1000000 done, 1000000 succeeded, 0 failed, 0 errored

status codes: 1000000 2xx, 0 3xx, 0 4xx, 0 5xx

traffic: 353000000 bytes total, 253000000 bytes http, 100000000 bytes data

Clearly, ApacheBench (AB) cannot benchmark G-WAN: AB is the bottleneck at 140k requests/sec, while weighttp delivers 595k requests/sec. Most of the difference comes from the 4 CPU Cores used by weighttp (4 x 140k = 560k). The rest comes from weighttp's use of "epoll", a faster event-based Linux mechanism.
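To show what an event-based mechanism like epoll does, here is a minimal, Linux-only illustration of the epoll API. It is not weighttp's event loop.

A descriptor is registered once with epoll_ctl(). Each epoll_wait() call then returns only the descriptors that have pending events, so the cost does not grow with the number of idle connections being watched. By contrast, select() and poll() rescan the whole set on every call.

```c
#include <assert.h>
#include <sys/epoll.h>
#include <unistd.h>

/* Registers the read end of a pipe with epoll, makes it readable, and lets
   a single epoll_wait() call report the ready descriptors.
   Returns the number of ready descriptors, or -1 on error. */
static int epoll_demo(void)
{
    int pipefd[2];
    if (pipe(pipefd) != 0)
        return -1;

    int ep = epoll_create1(0);
    if (ep < 0) {
        close(pipefd[0]);
        close(pipefd[1]);
        return -1;
    }

    struct epoll_event ev = { .events = EPOLLIN, .data.fd = pipefd[0] };
    int ready = -1;
    if (epoll_ctl(ep, EPOLL_CTL_ADD, pipefd[0], &ev) == 0 &&
        write(pipefd[1], "x", 1) == 1) {
        struct epoll_event events[16];
        ready = epoll_wait(ep, events, 16, 1000 /* ms timeout */);
        if (ready == 1)
            assert(events[0].data.fd == pipefd[0] && (events[0].events & EPOLLIN));
    }

    close(ep);
    close(pipefd[0]);
    close(pipefd[1]);
    return ready;
}
```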

Someone sent me the following bash script, claiming that AB can be made relevant on multicore with the taskset tool. The only way to see if this is the case is to test it:

for i in $(seq 0 3); do taskset -c $i ab -k -c 50 -n 1000000 'http://127.0.0.1/100.html' | grep '(mean)' &> $i.log & done; wait

ab -k -c 50 -n 1000000 http://127.0.0.1/100.html

...

Time taken for tests: 7.094 seconds

Requests per second: 140965.17 [#/sec] (mean)

Time per request: 0.355 [ms] (mean)

./bench.sh

...

Requests per second: 72731.63 [#/sec] (mean)

Time per request: 0.687 [ms] (mean)

Requests per second: 73923.53 [#/sec] (mean)

Time per request: 0.676 [ms] (mean)

Requests per second: 71168.06 [#/sec] (mean)

Time per request: 0.703 [ms] (mean)

Requests per second: 74232.14 [#/sec] (mean)

Time per request: 0.674 [ms] (mean)

-----------------------------------------------

Total: 292,055.36 req/sec in 18 seconds

Even with taskset, AB does not scale on multicore. Each of the 4 AB instances running in parallel delivers about half the throughput of a single instance, so 4 Cores merely double the total. As a benchmark tool, AB is the bottleneck: it just cannot be used to test servers that scale vertically.
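This observation can be quantified as a parallel scaling efficiency. It is the total throughput divided by the number of instances times the throughput of a single instance. Here is a minimal C helper (the name is hypothetical):

```c
#include <assert.h>

/* Parallel scaling efficiency: 1.0 means N instances deliver N times the
   throughput of a single instance; 0.5 means each runs at half speed. */
static double scaling_efficiency(double total_rps, unsigned instances,
                                 double single_rps)
{
    assert(instances > 0 && single_rps > 0.0);
    return total_rps / ((double)instances * single_rps);
}
```

With the figures above, 292,055.36 / (4 x 140,965.17) is about 0.52: each pinned AB instance runs at roughly half speed.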

The ab.c wrapper for Weighttp

ab.c initially supported ApacheBench and HTTPerf, but this made the code pointlessly unreadable, so their support was dropped (in any case, their irrelevance is easily demonstrated). The name 'ab.c' keeps a reference to 'AB', as the next step in performance measurement made necessary by multicore CPUs (IBM shipped the first one, the POWER4, in 2001).

ab.c runs tests on the [1 - 1,000] concurrency range. Such a range makes sense, especially if you use at least 3 rounds per concurrency level to get a minimum, average and maximum value at each step.
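A concurrency sweep with several rounds per step can be sketched as follows. This is an assumption about how such a wrapper may work, not ab.c's source:

- summarize_rounds() reduces the RPS of each round to min/avg/max.
- concurrency_at() builds the 1, 10, 20, ... 1,000 sequence seen in ab.c's output. A concurrency of 0 is meaningless, so the first step is clamped to 1.

```c
#include <assert.h>
#include <stddef.h>

/* Lowest, average and highest requests per second over the rounds run at
   one concurrency level. */
typedef struct {
    double min, avg, max;
} round_stats_t;

static round_stats_t summarize_rounds(const double *rps, size_t rounds)
{
    assert(rps != NULL && rounds > 0);
    round_stats_t s = { rps[0], 0.0, rps[0] };
    double sum = 0.0;
    for (size_t i = 0; i < rounds; i++) {
        if (rps[i] < s.min) s.min = rps[i];
        if (rps[i] > s.max) s.max = rps[i];
        sum += rps[i];
    }
    s.avg = sum / (double)rounds;
    return s;
}

/* Concurrency used at step i of a sweep starting at 0 with a fixed step;
   a concurrency of 0 is meaningless, so the first step is clamped to 1. */
static unsigned concurrency_at(unsigned i, unsigned step)
{
    unsigned c = i * step;
    return c ? c : 1;
}

/* Number of steps in a sweep from 0 to max (inclusive). */
static unsigned step_count(unsigned max, unsigned step)
{
    assert(step > 0);
    return max / step + 1;
}
```

With a step of 10 on [0-1,000], step_count() gives 101 concurrency levels, so 3 rounds per level mean 303 weighttp runs. Summing the per-step averages produces a total like the one printed at the bottom of ab.c's table. This is why dividing it by the number of steps (about 100) estimates the average RPS over the whole range.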

With such a long (and continuous) series of tests, you get more relevant results. A general trend can be extracted from the whole test, and the slope of each server's results curve is as useful as its variability for interpreting a program's behavior.

Running weighttp 1,000 times (or more) for each server, in a continuous way, and each time with different parameters, is a tedious task (best left to computers).

The ab.c program does just that: it lets you define the URLs to test and the concurrency range, and it collects the results in a CSV file suitable for charting with LibreOffice or gnuplot (apt-get install gnuplot).
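Writing such results as CSV is straightforward. The sketch below is hypothetical: the column names and the result_row_t type mirror the table printed by ab.c, but ab.c's actual file layout may differ.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* One concurrency step, with the columns printed by ab.c. */
typedef struct {
    unsigned clients;
    long rps_min, rps_avg, rps_max;
    long cpu_user, cpu_kernel;   /* jiffies */
    double ram_mb;
} result_row_t;

/* Starts a CSV document in buf with a header line. Returns 0 on success. */
static int csv_init(char *buf, size_t cap)
{
    int n = snprintf(buf, cap, "%s\n",
                     "clients,rps_min,rps_avg,rps_max,cpu_user,cpu_kernel,ram_mb");
    return (n < 0 || (size_t)n >= cap) ? -1 : 0;
}

/* Appends one row to the NUL-terminated text in buf. Returns 0 on success,
   -1 if the row did not fit (buf then ends with a truncated row). */
static int csv_append_row(char *buf, size_t cap, const result_row_t *r)
{
    assert(buf != NULL && r != NULL);
    size_t used = strlen(buf);
    int n = snprintf(buf + used, cap - used, "%u,%ld,%ld,%ld,%ld,%ld,%.2f\n",
                     r->clients, r->rps_min, r->rps_avg, r->rps_max,
                     r->cpu_user, r->cpu_kernel, r->ram_mb);
    return (n < 0 || (size_t)n >= cap - used) ? -1 : 0;
}
```

In gnuplot, set datafile separator "," lets the plot command read such a file directly.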

ab.c can also measure the CPU and RAM resources consumed by the web server and by the system. Some servers delegate a significant part of their work to the system, which escapes measurements limited to their own process(es).
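One way to take such measurements on Linux is to read the /proc pseudo-files documented in proc(5):

- /proc/<pid>/stat gives a process's user and kernel CPU time (fields 14 and 15, in clock ticks).
- /proc/<pid>/statm gives its resident pages.
- The first line of /proc/stat gives the system-wide totals, which capture work delegated to the kernel.

This is a sketch of the technique, not ab.c's code. For a multi-process server, sum the values over all its PIDs.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <unistd.h>

/* CPU time (clock ticks) and resident memory (MB) of one process.
   Returns 0 on success, -1 on error. */
static int read_process_usage(pid_t pid, unsigned long *utime,
                              unsigned long *stime, double *rss_mb)
{
    char path[64], line[1024];
    assert(utime && stime && rss_mb);

    snprintf(path, sizeof path, "/proc/%d/stat", (int)pid);
    FILE *f = fopen(path, "r");
    if (!f) return -1;
    char *ok = fgets(line, sizeof line, f);
    fclose(f);
    if (!ok) return -1;

    /* Field 2 (the command name) is in parentheses and may contain spaces,
       so parsing restarts after the last ')': state, ppid, pgrp, session,
       tty_nr, tpgid, flags, minflt, cminflt, majflt, cmajflt, utime, stime. */
    char *p = strrchr(line, ')');
    if (!p) return -1;
    if (sscanf(p + 1, " %*c %*d %*d %*d %*d %*d %*u %*lu %*lu %*lu %*lu %lu %lu",
               utime, stime) != 2)
        return -1;

    /* Field 2 of statm is the number of resident pages. */
    unsigned long resident = 0;
    snprintf(path, sizeof path, "/proc/%d/statm", (int)pid);
    f = fopen(path, "r");
    if (!f) return -1;
    int n = fscanf(f, "%*lu %lu", &resident);
    fclose(f);
    if (n != 1) return -1;
    *rss_mb = resident * (double)sysconf(_SC_PAGESIZE) / (1024.0 * 1024.0);
    return 0;
}

/* System-wide user and system CPU time from the first line of /proc/stat
   ("cpu  user nice system idle ..."). Returns 0 on success. */
static int read_system_cpu(unsigned long long *user, unsigned long long *system)
{
    FILE *f = fopen("/proc/stat", "r");
    if (!f) return -1;
    int n = fscanf(f, "cpu %llu %*llu %llu", user, system);
    fclose(f);
    return n == 2 ? 0 : -1;
}
```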

The ab.c file can either be run by G-WAN or be compiled by GCC (see the comments at the top of the ab.c file).

If you run it with G-WAN, copy ab.c into the directory where the gwan executable is stored and run G-WAN twice: once as a server, and a second time to run the ab.c test program:

sudo ./gwan (run gwan server)

./gwan -r ab.c gwan 127.0.0.1/100.html (run ab.c [server_name] <URL>)

If you compile ab.c with gcc -O2 ab.c -o abc -lpthread, then run:

sudo ./gwan (run gwan server)

./abc gwan 127.0.0.1/100.html (run ab.c [server_name] <URL>)

This displays the following (also saved in "result_gwan_100.html.txt"):

===============================================================================

G-WAN ApacheBench / Weighttp / HTTPerf wrapper http://gwan.ch/source/ab.c

-------------------------------------------------------------------------------

Now: Thu Sep 28 18:52:03 2013

CPU: 1 x 6-Core CPU(s) Intel(R) Xeon(R) CPU W3680 @ 3.33GHz

RAM: 6.88/7.80 (Free/Total, in GB)

OS : Linux x86_64 v#50-Ubuntu SMP Fri Mar 18 18:42:20 UTC 2011 2.6.35-28-generic

Ubuntu 10.10 \n \l

> Server 'gwan' process topology:

---------------------------------------------

6] pid:5622 Thread

5] pid:5621 Thread

4] pid:5620 Thread

3] pid:5619 Thread

2] pid:5618 Thread

1] pid:5617 Thread

0] pid:5506 Process RAM: 2.29 MB

---------------------------------------------

Total 'gwan' server footprint: 2.29 MB

/home/pierre/gwan/gwan

G-WAN 4.9.28 64-bit (Sep 28 2013 15:07:23)

weighttp -n 1000000 -c [0-1000 step:10 rounds:3] -t 6 -k "http://127.0.0.1/100.html"

=> HTTP/1.1 200 OK

Server: G-WAN

Date: Thu, 28 Sep 2013 20:52:03 GMT

Last-Modified: Thu, 13 Oct 2011 13:15:12 GMT

ETag: "1f538066-4e96e460-64"

Vary: Accept-Encoding

Accept-Ranges: bytes

Content-Type: text/html; charset=UTF-8

Content-Length: 100

Connection: close

=> XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX...

Clients, min RPS, ave RPS, max RPS, CPU user, CPU kernel, MB RAM, Time

-------------------------------------------------------------------------------

1, 152678, 220251, 279337, 392, 1484, 2.32, 08:07:40

10, 283362, 301733, 318171, 474, 1568, 2.32, 08:07:50

20, 591956, 618668, 662597, 369, 1440, 2.32, 08:07:55

30, 718260, 739384, 777479, 425, 1286, 2.32, 08:07:59

40, 658536, 736343, 797682, 422, 1241, 2.32, 08:08:03

50, 775151, 788168, 800177, 378, 1274, 2.32, 08:08:07

60, 615532, 667071, 764336, 357, 1331, 2.32, 08:08:11

70, 792621, 802455, 816932, 334, 1303, 2.32, 08:08:15

80, 807515, 823596, 839406, 401, 1258, 2.32, 08:08:19

90, 756659, 797750, 824328, 343, 1300, 2.32, 08:08:22

100, 823602, 831829, 836091, 313, 1302, 2.32, 08:08:26

110, 726856, 797436, 837584, 392, 1349, 2.32, 08:08:30

120, 822744, 834193, 840570, 327, 1289, 2.32, 08:08:33

130, 828726, 840265, 846978, 418, 1220, 2.32, 08:08:37

140, 797041, 820530, 857351, 366, 1242, 2.32, 08:08:41

150, 778589, 818561, 847754, 366, 1311, 2.32, 08:08:44

160, 840447, 846376, 855455, 352, 1260, 2.32, 08:08:48

170, 840446, 844842, 848324, 366, 1243, 2.32, 08:08:51

180, 818502, 834756, 854787, 364, 1243, 2.32, 08:08:55

190, 818874, 830426, 844438, 318, 1307, 2.32, 08:08:59

200, 792267, 827353, 846006, 343, 1286, 2.32, 08:09:02

210, 719377, 800988, 846982, 980, 3549, 2.32, 08:09:06

220, 841211, 846224, 850133, 413, 1213, 2.32, 08:09:10

230, 843732, 847659, 850862, 369, 1248, 2.32, 08:09:13

240, 837850, 842696, 847033, 373, 1252, 2.32, 08:09:17

250, 840531, 846152, 849944, 364, 1260, 2.32, 08:09:20

260, 844300, 848033, 850777, 292, 1321, 2.32, 08:09:24

270, 829653, 838570, 856243, 355, 1246, 2.32, 08:09:27

280, 777657, 823413, 849086, 383, 1211, 2.32, 08:09:31

290, 840282, 843783, 849591, 320, 1297, 2.32, 08:09:35

300, 836449, 842222, 850771, 369, 1258, 2.32, 08:09:38

310, 815157, 835302, 850166, 331, 1298, 2.32, 08:09:42

320, 838998, 841898, 843357, 338, 1300, 2.32, 08:09:45

330, 829140, 835129, 841611, 331, 1304, 2.32, 08:09:49

340, 779995, 805174, 838465, 334, 1356, 2.32, 08:09:53

350, 843775, 844373, 845439, 362, 1274, 2.32, 08:09:56

360, 841599, 845898, 848848, 343, 1288, 2.32, 08:10:00

370, 793889, 824918, 843308, 369, 1297, 2.32, 08:10:03

380, 772776, 821580, 847750, 341, 1295, 2.32, 08:10:07

390, 837953, 845142, 850654, 299, 1327, 2.32, 08:10:11

400, 840662, 845963, 853726, 369, 1253, 2.32, 08:10:14

410, 803891, 831454, 845386, 331, 1302, 2.32, 08:10:18

420, 827370, 838941, 848850, 357, 1272, 2.32, 08:10:21

430, 842613, 845519, 847311, 387, 1239, 2.32, 08:10:25

440, 843442, 846206, 851032, 355, 1267, 2.32, 08:10:28

450, 785780, 823147, 845937, 385, 1252, 2.32, 08:10:32

460, 834546, 839099, 841910, 401, 1227, 2.32, 08:10:36

470, 830758, 835113, 840067, 357, 1276, 2.32, 08:10:39

480, 788556, 822908, 848298, 366, 1277, 2.32, 08:10:43

490, 833311, 841979, 848084, 371, 1253, 2.32, 08:10:46

500, 831943, 838932, 849478, 341, 1279, 2.32, 08:10:50

510, 836036, 842524, 849206, 355, 1274, 2.32, 08:10:54

520, 844894, 848526, 852083, 334, 1295, 2.32, 08:10:57

530, 840880, 847663, 853107, 299, 1330, 2.32, 08:11:01

540, 788728, 824870, 845167, 396, 1300, 2.32, 08:11:04

550, 834439, 842538, 851526, 404, 1230, 2.32, 08:11:08

560, 833872, 838739, 844909, 394, 1227, 2.32, 08:11:12

570, 831906, 839622, 846857, 371, 1253, 2.32, 08:11:15

580, 798701, 826954, 843199, 355, 1269, 2.32, 08:11:19

590, 801124, 827933, 846073, 338, 1304, 2.32, 08:11:22

600, 826464, 837761, 850053, 336, 1283, 2.32, 08:11:26

610, 817589, 830982, 838716, 344, 1325, 2.32, 08:11:30

620, 797157, 824149, 844845, 324, 1325, 2.32, 08:11:33

630, 835954, 839543, 843219, 355, 1286, 2.32, 08:11:37

640, 805020, 813326, 827024, 355, 1281, 2.32, 08:11:40

650, 727524, 798570, 836528, 401, 1246, 2.32, 08:11:44

660, 806741, 829463, 841187, 390, 1251, 2.32, 08:11:48

670, 838255, 844630, 850748, 357, 1274, 2.32, 08:11:51

680, 830290, 840271, 847011, 406, 1237, 2.32, 08:11:55

690, 843669, 845673, 846854, 338, 1290, 2.32, 08:11:59

700, 839085, 844716, 848786, 331, 1300, 2.32, 08:12:02

710, 825393, 835650, 847304, 366, 1279, 2.32, 08:12:06

720, 822331, 836362, 848265, 313, 1335, 2.32, 08:12:09

730, 841236, 844834, 848198, 322, 1318, 2.32, 08:12:13

740, 837295, 839035, 841725, 348, 1279, 2.32, 08:12:16

750, 842605, 844794, 847091, 357, 1274, 2.32, 08:12:20

760, 838908, 843304, 845683, 313, 1328, 2.32, 08:12:24

770, 833358, 836914, 838761, 348, 1269, 2.32, 08:12:27

780, 839677, 843890, 848215, 376, 1265, 2.32, 08:12:31

790, 810000, 832713, 844356, 364, 1267, 2.32, 08:12:34

800, 796423, 825282, 845784, 376, 1291, 2.32, 08:12:38

810, 828858, 837415, 845210, 380, 1248, 2.32, 08:12:42

820, 826608, 836079, 843948, 350, 1288, 2.32, 08:12:45

830, 797722, 824175, 838571, 362, 1232, 2.32, 08:12:49

840, 827289, 834943, 840495, 334, 1314, 2.32, 08:12:52

850, 829754, 837062, 840883, 348, 1295, 2.32, 08:12:56

860, 811395, 827793, 838070, 390, 1262, 2.32, 08:13:00

870, 823325, 828099, 837477, 365, 1281, 2.32, 08:13:03

880, 833904, 838703, 844646, 378, 1258, 2.32, 08:13:07

890, 823148, 832111, 839696, 362, 1295, 2.32, 08:13:10

900, 831513, 835048, 837028, 366, 1283, 2.32, 08:13:14

910, 833647, 835858, 837625, 331, 1309, 2.32, 08:13:18

920, 804111, 824672, 836163, 341, 1324, 2.32, 08:13:21

930, 821785, 827819, 833732, 348, 1307, 2.32, 08:13:25

940, 821629, 829049, 839868, 350, 1288, 2.32, 08:13:29

950, 797319, 808653, 826050, 364, 1279, 2.32, 08:13:32

960, 828641, 833717, 836952, 348, 1295, 2.32, 08:13:36

970, 817147, 825612, 831693, 338, 1293, 2.32, 08:13:40

980, 822798, 831615, 837336, 313, 1323, 2.32, 08:13:43

990, 699485, 785803, 831896, 315, 1212, 2.32, 08:13:47

1000, 715512, 774247, 823320, 352, 1179, 2.32, 08:13:51

-------------------------------------------------------------------------------

min:80528704 avg:82290105 max:83730021 Time:388 second(s) [00:06:28]

-------------------------------------------------------------------------------

CPU jiffies: user:323931 kernel:1170785 total:1494716

Why such a test? Servers can hardly be compared without all this information:

- Performance (requests per second, total elapsed time)

- Scalability (the [1-1,000] concurrency range)

- Efficiency (CPU and RAM resources)

The min, ave and max columns show the lowest, average and highest requests per second measured over the rounds run at each concurrency level (here 3, as set by "rounds:3" on the weighttp line). The concurrency differs on each line.

The totals at the bottom of the min, ave and max columns are the SUMS of all the values above them. These values are useful for quickly comparing the final scores of several tests, but they are not an average per second, for two reasons:

(a) these SUMS are not related to the TOTAL elapsed time;

(b) they do not cover the whole test duration, because of the concurrency step and because the test itself takes time to execute (each server accepts connections and processes requests at its own pace).

The CPU values are amounts of jiffies (a time unit defined by the Linux kernel, unrelated to a percentage of the available CPU resources). The RAM values are the MB used by G-WAN.
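Jiffies are time units, so turning them into a percentage requires the tick rate and the elapsed time. In user space, /proc reports CPU time in clock ticks, whose rate is given by sysconf(_SC_CLK_TCK) (usually 100 per second on Linux). Here is a minimal C sketch with hypothetical helper names:

```c
#include <assert.h>
#include <unistd.h>

/* Converts clock ticks ("jiffies" as exposed in /proc) to seconds. */
static double ticks_to_seconds(unsigned long long ticks, long ticks_per_sec)
{
    assert(ticks_per_sec > 0);
    return (double)ticks / (double)ticks_per_sec;
}

/* Average CPU utilization over a run, as a fraction of all Cores
   (1.0 = every Core busy for the whole elapsed time). */
static double cpu_utilization(unsigned long long ticks, long ticks_per_sec,
                              double elapsed_sec, long cores)
{
    assert(elapsed_sec > 0.0 && cores > 0);
    return ticks_to_seconds(ticks, ticks_per_sec) / (elapsed_sec * (double)cores);
}

/* Clock ticks per second on this machine (USER_HZ). */
static long host_ticks_per_sec(void)
{
    return sysconf(_SC_CLK_TCK);
}
```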

To get a taste of what ab.c does, here are comparisons made with this test, which processes 1 billion HTTP requests:

(weighttp requests per concurrency:1 million, range:[1-1,000], concurrency step:10, concurrency rounds:10)

100.html (100-byte static file, keep-alives)

G-WAN server min:80528704 avg:82290105 max:83730021 Time: 1292 seconds [00:21:32]

Lighty server min:21218587 avg:21648673 max:21956268 Time: 4740 seconds [01:19:00]

Nginx server min:15072297 avg:15927773 max:16797720 Time: 6823 seconds [01:53:43]

Varnish cache min: 8817943 avg: 9612933 max:10399610 Time:10817 seconds [03:00:17]

hello world

G-WAN + C min:69718806 avg:76476595 max:80158520 Time: 1551 seconds [00:25:51]

G-WAN + Java min:67829055 avg:72811610 max:75972646 Time: 1648 seconds [00:27:28]

G-WAN + Scala min:67646477 avg:72637502 max:75776744 Time: 1660 seconds [00:27:40]

G-WAN + JS min:68204491 avg:73775202 max:76865982 Time: 1696 seconds [00:28:16]

G-WAN + Go min:69063401 avg:75203358 max:78411364 Time: 1892 seconds [00:31:32]

G-WAN + Lua min:67727190 avg:72511554 max:75758859 Time: 1920 seconds [00:32:00]

G-WAN + Perl min:69802019 avg:75089420 max:78208829 Time: 1977 seconds [00:32:57]

G-WAN + Ruby min:69274839 avg:74538113 max:77808764 Time: 2054 seconds [00:34:14]

G-WAN + Python min:69158531 avg:74223281 max:77418044 Time: 2110 seconds [00:35:10]

G-WAN + PHP min:56242039 avg:59764709 max:61338987 Time: 2212 seconds [00:36:52]

Tomcat min: 5715150 avg: 6709361 max: 7655606 Time:20312 seconds [05:38:32]

Node.js min: 1239556 avg: 1336105 max: 1420920 Time:80102 seconds [22:15:02]

Google Go min: 1148172 avg: 1208407 max: 1280151 Time:84811 seconds [23:33:31]

These results are sorted by performance within each group (shortest elapsed time for the whole test first). Divide the min/avg/max numbers by 100 to estimate the average RPS over the whole [1-1,000] concurrency range: each is the SUM of about 100 values, one per concurrency step.

Note that, depending on the server being tested, a test can take several weeks. The duration is usually correlated to the RPS, but other factors come into play, like the ability of server processes to accept connections on the fly (some let them pile up in the listen backlog).

How to Spot "Creative Accounting" (aka: Fake Tests, F.U.D., etc.)

That's easier than anticipated, for three reasons:

(a) there's no real work or skill behind FUD campaigns;

(b) to stay anonymous, their authors often use new accounts, unknown nicknames, or young people desperate to find a job rather than seasoned experts (who are understandably unwilling to be publicly shamed);

(c) "influencing minds" only requires focusing on volume (you have to "occupy the space") rather than on correctness:

"It is easier to believe a lie that one has heard a thousand times than to believe a fact that one has never heard before."

– Robert Lynd

Here is a quick check-list:

- the environment is not correctly documented (system, hardware, server configuration, etc.; see above)

- on their 2013 6/8-Core CPU(s) G-WAN is slower than (or just as fast as) on a 2006 Core2 Duo (very common)

- the benchmark tool has been chosen for its irrelevance (it's the bottleneck, it's pre-establishing connections, etc.)

- "Server X" is much faster than G-WAN... but "Server X" will die in pain with the loan.c test (despite G-WAN using a script).

- the published figures target a very narrow concurrency range rather than G-WAN's [0-1,000] or even [0-1,000,000] tests, and/or they carefully avoid any resemblance to reality (sadly, outright lies are often the most widely published).

Again, an easy-to-check criterion is the extraordinary volume of copies of some "neutral tests" or hate blog posts. If their authors are not part of a well-funded FUD campaign, how can their prose be duplicated on hundreds of Web sites and "validated" by a chain of comments from anonymous accounts and fake identities?

Logic dictates that the more FUD you see against someone, the more the target threatens the business of the FUD sponsors.

Conclusion

The fact that benchmarking tools do not tell you how to run meaningful tests should raise some questions. So should the fact that Web/Proxy server vendors rarely publish extensive comparative benchmarks, and forget to document them when they do publish tests.

When facing a choice in server technologies, do your homework! At least now you know how to proceed.